The rapid progress in large language models (LLMs) and their widespread accessibility have sparked enthusiasm and concern in the scientific community. Ethical and policy experts in artificial intelligence highlight the need for careful and responsible use of these technologies to maintain scientific integrity and trust.

LLMs, which emerged around 2017, are deep learning models trained without explicit supervision on extensive text data, with steadily growing parameter counts and capabilities. The debate over their application in science intensified in late 2022 following the increased availability of LLM tools capable of generating and editing scientific content or answering research questions.

How can LLMs account for outdated or incorrect knowledge in the published literature?

Concerns about LLMs have garnered attention from scientists, the public, journalists, and lawmakers. These models are often touted as revolutionary, potentially reshaping everything from information search to art creation and scientific research. However, many assertions about their capabilities remain unsubstantiated, leaving critics to challenge them. Despite tangible adverse effects on marginalized groups, discussions about LLMs often overlook critical issues like responsibility, accountability, and labor exploitation, focusing instead on abstract debates about their intelligence and moral status.

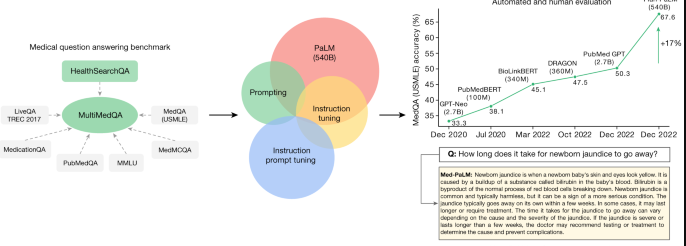

One major challenge for LLMs in science and regenerative medicine is addressing outdated or incorrect information in published literature. Generative AI, including models that produce more than text, is significantly disrupting various fields. In science, domain expertise is especially crucial for identifying when these models present false information as fact.

Historically, disruptive technologies have consistently stirred great hopes and fears. For instance, the printing press, automobiles, and telephones were initially met with skepticism and suspicion. While some concerns were unfounded, unforeseen risks did emerge, such as the environmental impact of cars. Predicting the long-term effects of such technologies is challenging, and the ecological footprint of modern AI technologies is an urgent issue needing attention.

How do we trust science in the age of LLMs?

LLMs and related technologies will play a crucial role in future scientific discoveries. Researchers must approach these tools cautiously, adhering to scientific rigor and methods. As sequence predictors, LLMs cannot understand or contribute meaningfully to scientific discourse, because they do not possess the human-like qualities necessary for scientific inquiry.

Three main concerns arise with LLMs in science:

- They may fail to grasp the nuanced value judgments in scientific writing, potentially oversimplifying complex research.

- LLMs can generate false content, as seen with Meta’s Galactica, which was quickly withdrawn due to inaccuracies.

- Using LLMs in peer review can threaten trust in this critical scientific function.

Ultimately, LLMs are tools and should not replace human scientists. They lack the subjective perspectives and responsibilities inherent in scientific research. As we embrace these technologies, scientists must ensure their responsible and ethical use, especially as we are still early in the life cycle of GenAI.

Integration of LLMs into scientific practice must be handled responsibly. Collaboration across scientific communities is necessary to develop best practices and standards. Researchers should view LLMs as aids in scientific exploration, not as autonomous creators of knowledge.

What is the role of the scientist in the age of LLMs?

As the scientific community continues to use LLMs, it’s essential to prioritize research on reliable detectors of LLM-generated content and to adapt practices in consultation with AI ethics experts. The role of the scientist in this era is crucial, requiring vocal advocacy for responsible technology use and interdisciplinary collaboration.

In summary, while LLMs and GenAI offer exciting possibilities, their integration into scientific practice demands careful consideration, ethical responsibility, and continuous reevaluation to ensure they enhance, rather than undermine, scientific rigor and discovery.